Google unveils Gemini Robotics-ER 1.5 to power advanced embodied reasoning

The AI model is meant to give developers broad access to advanced spatial, temporal, and tool-calling capabilities for physical agents.

Google has released Gemini Robotics-ER 1.5, its latest embodied reasoning AI model for robotics, making the technology broadly available to developers for the first time. Optimized as a high-level “brain” for physical agents, the new model is designed to tackle real-world robotics tasks that combine complex perception, reasoning, and planning.

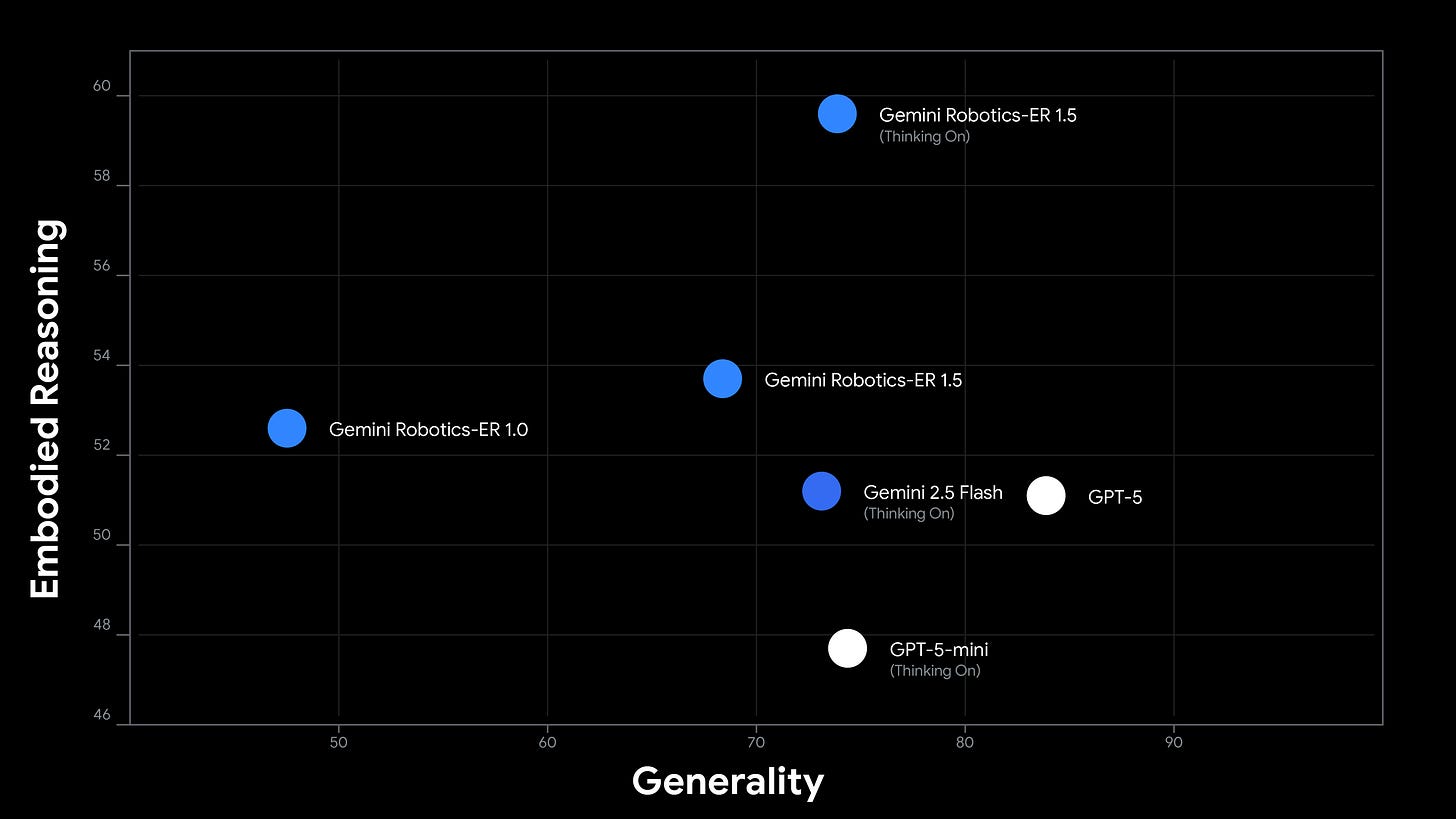

The company says Gemini Robotics-ER 1.5 is purpose-tuned for robotics and achieves state-of-the-art results on both academic and internal benchmarks. It serves as the core reasoning component in Google’s broader Gemini Robotics system.

Gemini Robotics-ER 1.5 is built to interpret natural language commands, analyze visual input, generate spatial plans, and choreograph multi-step actions for robots. Its architecture is specifically tuned for embodied reasoning, focusing on challenges such as understanding objects in context, managing task sequences, and estimating progress as actions unfold. “You can think of Gemini Robotics-ER 1.5 as the high-level brain for your robot. It can understand complex natural language commands, reason through long-horizon tasks, and orchestrate sophisticated behaviors,” the company stated.

A key feature of the model is its spatial understanding, enabling precise localization of objects and their states within a scene. Gemini Robotics-ER 1.5 can translate user prompts—such as pointing out items in a kitchen or tracking the state of a container across video frames—into accurate spatial coordinates, informing a robot’s subsequent motion plan. The model is also capable of temporal reasoning, processing sequences of actions over time and outputting step-by-step task breakdowns with timestamp granularity.
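
As a rough illustration of how such coordinates might feed a motion planner, the sketch below converts pointing output into pixel positions. It assumes the model replies with a JSON list of `{"point": [y, x], "label": ...}` entries normalized to a 0-1000 range; that format, the sample reply, and the `points_to_pixels` helper are assumptions made for illustration and should be checked against Google's documentation.

```python
import json


def points_to_pixels(model_json: str, width: int, height: int) -> list[dict]:
    """Convert normalized [y, x] points (assumed 0-1000) to pixel (x, y) positions."""
    results = []
    for item in json.loads(model_json):
        y_norm, x_norm = item["point"]  # assumed order: y first, then x
        results.append({
            "label": item["label"],
            "x": round(x_norm * width / 1000),
            "y": round(y_norm * height / 1000),
        })
    return results


# Hypothetical model reply for a 640x480 image of a kitchen.
reply = '[{"point": [500, 250], "label": "mug"}, {"point": [100, 900], "label": "kettle"}]'
pixels = points_to_pixels(reply, width=640, height=480)
for p in pixels:
    print(p["label"], p["x"], p["y"])
```

Keeping the conversion in one small function makes it easy to swap in a different coordinate convention if the model's actual output differs.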

Google highlights the model’s ability to integrate information from various sources. Gemini Robotics-ER 1.5 can call external tools including Google Search for context-specific guidance and can activate subordinate models—such as vision-language-action (VLA) models or third-party user-defined functions—to execute the full pipeline from perception to actuation.
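
One way to picture this orchestration is a small dispatcher that maps function names chosen by the reasoning model onto robot-side code. The sketch below is not Google's API: the `TOOLS` registry, the `pick_and_place` and `lookup_guidelines` functions, and the shape of the plan are all hypothetical stand-ins for a VLA skill, a search tool, and the model's function-call output.

```python
from typing import Callable


# Hypothetical stand-ins for a VLA skill and a search-style tool.
def pick_and_place(obj: str, target: str) -> str:
    return f"moved {obj} to {target}"


def lookup_guidelines(query: str) -> str:
    return f"guidelines for {query}"


# Registry of user-defined functions the planner is allowed to call.
TOOLS: dict[str, Callable[..., str]] = {
    "pick_and_place": pick_and_place,
    "lookup_guidelines": lookup_guidelines,
}


def run_plan(plan: list[dict]) -> list[str]:
    """Run each step by looking up its function name; skip unknown names."""
    log = []
    for step in plan:
        fn = TOOLS.get(step["function"])
        if fn is None:
            log.append(f"skipped unknown function {step['function']}")
            continue
        log.append(fn(**step["args"]))
    return log


# Hypothetical plan, as a reasoning model might emit it via function calls.
plan = [
    {"function": "lookup_guidelines", "args": {"query": "recycling"}},
    {"function": "pick_and_place", "args": {"obj": "can", "target": "blue bin"}},
    {"function": "fly", "args": {}},
]
log = run_plan(plan)
```

Rejecting names that are not in the registry keeps the reasoning model from triggering behavior the developer never exposed.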

Demonstration examples provided by Google include instructing a robot to clean up a table, identifying where to place a mug to prepare coffee, and sorting trash according to location-specific recycling guidelines sourced from the internet. In each scenario, Gemini Robotics-ER 1.5 generates plans grounded in visual data and available knowledge, breaking complex requests down into a series of actionable steps.

The company notes that Gemini Robotics-ER 1.5 supports a configurable “thinking budget,” which controls how much reasoning the model does before it responds. “This allows developers to balance the need for low-latency responses with high-accuracy results for more challenging tasks,” the post explains.
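
In practice, a developer might pick a budget per task type. The sketch below builds a generation-config fragment with a `thinkingConfig.thinkingBudget` field, which mirrors the field names of the Gemini API's REST interface as best understood here. The task names and token values in `BUDGETS` are invented for illustration, and the exact field names should be verified against the current API reference.

```python
# Hypothetical budgets (in thinking tokens) per task type; values are illustrative.
BUDGETS = {
    "detect": 0,    # simple pointing: favor low latency
    "count": 512,   # moderate reasoning
    "plan": 4096,   # long-horizon planning: favor accuracy
}
DEFAULT_BUDGET = 1024  # fallback for task types not listed above


def build_generation_config(task: str) -> dict:
    """Return a config fragment carrying the thinking budget for this task type."""
    budget = BUDGETS.get(task, DEFAULT_BUDGET)
    return {"thinkingConfig": {"thinkingBudget": budget}}


fast = build_generation_config("detect")
careful = build_generation_config("plan")
```

The resulting dictionary would be merged into the request's generation settings; no network call is made here.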

In line with industry safety standards, Google emphasizes that the enhanced filtering and safeguards in Gemini Robotics-ER 1.5 are designed to supplement, not replace, traditional robotics safety practices. The company advocates a layered “Swiss cheese approach” to risk mitigation, in which several imperfect safeguards are stacked so that a gap in one layer is covered by another.
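
The layered idea can be sketched as a gate that lets a motion command through only if every independent check passes. Nothing below comes from Google's post: the `Move` type, the two checks, and their limits are hypothetical, and a real robot would rely on certified safety hardware and controllers rather than a script like this.

```python
from dataclasses import dataclass


@dataclass
class Move:
    x: float      # target position, normalized to the workspace
    y: float
    speed: float  # commanded speed in m/s


# Each layer is an independent check; the limits are illustrative only.
def within_workspace(m: Move) -> bool:
    return 0.0 <= m.x <= 1.0 and 0.0 <= m.y <= 1.0


def below_speed_limit(m: Move) -> bool:
    return m.speed <= 0.25


LAYERS = [within_workspace, below_speed_limit]


def is_allowed(move: Move) -> bool:
    """A move is allowed only if no layer rejects it."""
    return all(layer(move) for layer in LAYERS)
```

Because the layers are independent, a command that slips past one check can still be stopped by another.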

Gemini Robotics-ER 1.5 is now accessible via Google AI Studio and the Gemini API, marking a significant step in making advanced robotic reasoning capabilities available to a wider developer audience.